Mental Models for People Who Actually Want to Think

Models for clarity, calibration, and non-performative growth

You don’t need to be smarter than everyone. You just need a better way of seeing.

Because most people aren’t thinking—they’re repeating. They’re performing. They’re stacking conclusions on top of assumptions they’ve never examined, then defending them like gospel.

The result? A culture full of noise and narratives, with very little signal.

That’s where mental models come in.

A mental model is just a way of understanding how something works. It’s a simplified version of reality that helps you make decisions, solve problems, or spot bullshit faster.

Think of them like lenses or internal frameworks—rules of thumb that help you cut through complexity. You can’t see everything clearly all the time, but with the right models, you can get closer.

Some are structural:

First Principles helps you break things down to what’s undeniably true.

The 80/20 Rule reminds you that most outputs come from a small percentage of inputs.

Inversion asks you to look at a problem backwards: instead of “how do I win?” ask “how do I guarantee failure?”

Some are social:

Hanlon’s Razor tells you not to attribute to malice what can be explained by stupidity.

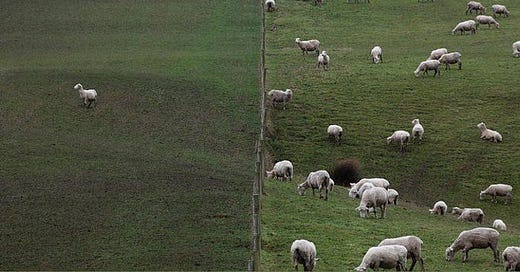

The Map Is Not the Territory reminds you that a description of reality is not reality itself: just because something sounds good on paper doesn’t mean it works in practice.

Good models improve your thinking and upgrade your clarity. Bad ones just amplify your bias.

The danger is: most people don’t even realize which models they’re running. They inherit them. They absorb them through culture, school, social media. And once those beliefs harden into identity, it’s almost impossible to update.

That’s why identity politics are so dangerous—not just socially, but cognitively.

Once your beliefs become who you are, you stop being able to think. Every disagreement feels personal. Every challenge feels like an attack. You’re no longer adjusting your model to fit reality—you’re adjusting reality to protect the model.

If you want to stay sharp, you have to decouple your identity from your operating system. You have to be able to say: I believed that. It no longer fits. I’m changing my mind.

Most people can’t. Not because they’re not smart—but because their ego won’t let them.

But if you want to build something real—clarity, systems, judgment—you have to think for yourself. Which means you need better tools.

This essay is a breakdown of the mental models that actually help you think—so you can reason clearly, ask better questions, and operate with less friction.

The first rule of clear thinking is to stop treating your ideas like sacred objects.

Because they’re not. They’re tools. And the faster you learn to break them, the better they get.

This is the foundation of Karl Popper’s philosophy. Popper was a 20th-century philosopher of science—arguably one of the most influential of all time. He challenged the old idea that science advances through confirmation. His view, rather, was that real science doesn’t prove ideas right. It tries to prove them wrong. And the ideas that survive repeated attempts to kill them—those are the ones worth keeping. But only until something better comes along.

He called this falsifiability: if no possible test or observation could prove a theory wrong, it doesn’t belong in science. It belongs in belief.

This was a shift. Popper reframed science not as a pile of facts, but as a process. A method for error correction. A system built not on certainty, but on constant self-revision.

That’s the model. And it doesn’t just apply to labs and whiteboards—it applies everywhere. To how we build, how we lead, how we run our internal logic.

Ray Dalio applied a similar principle to finance.

At Bridgewater, decisions are stress-tested like hypotheses. Meetings are recorded. Ideas are rated. Mistakes are flagged without ego. Not because it’s nice—but because it’s efficient. Because falsifiability scales.


What Popper and Dalio both understood is this: you don’t get smarter by being right. You get smarter by systematically noticing when you’re wrong—and fixing it fast.

It’s not glamorous. It’s not performative. But it’s real.

And that’s the difference between someone who grows and someone who performs: one treats ideas like tools. The other treats them like extensions of the self.

The danger of the latter is obvious: if you’re too attached to a model, you can’t update it. And if you can’t update it, you stagnate. You stop learning. You get louder, not sharper.

Popper’s mental model is the antidote to all that: treat everything you believe as potentially wrong. Try to break it. If it survives, great. If it doesn’t, update and move on.

That’s how science evolves.

That’s how minds evolve.

That’s how anything evolves that actually wants to stay in contact with reality.

Most people chase clever.

It’s performative. It’s rewarded. It feels good to dazzle someone with your sharp take or obscure reference. But clever is fragile. It relies on constant novelty. It needs the room to be impressed.

And worst of all: cleverness often hides stupidity.

Charlie Munger, the longtime partner of Warren Buffett, didn’t care about clever. He cared about clarity. His core model, a maxim he borrowed from the mathematician Carl Jacobi, was simple: invert. Always invert.

Instead of asking, “How do I succeed?”—ask, “How do I guarantee failure?” Then avoid that.

Instead of chasing brilliance, focus on removing stupidity.

It sounds too simple to work. But that’s the point. Munger’s genius wasn’t in having exotic answers. It was in asking sharper questions.

“It is remarkable how much long-term advantage people like us have gotten by trying to be consistently not stupid, instead of trying to be very intelligent.” —Charlie Munger

This is the exact opposite of how most institutions work. In academia, business, and media, the incentive is to say something impressive. To show how much you know. But Munger knew that most progress comes not from what you know—but from what you stop doing.

This is why inversion is such a powerful tool. It forces you to look at the edges. The weak links. The contradictions.

Want to make better decisions? Ask how to make terrible ones.

Want to build a trusted brand? List the ways to destroy trust.

Want to stay sharp over decades? Identify what dulls the mind—and avoid it like a toxin.

Munger collected mental models the way others collect status.

From biology, psychology, engineering, statistics—he pulled from dozens of disciplines. Because the more lenses you have, the less likely you are to miss the obvious.

But his core model was always the same: simplify. Invert. Avoid the dumb stuff.

You don’t need to be a genius to win. You just need to avoid being a consistent idiot.

First Principles, the 5 Whys, Hanlon’s Razor, and other non-performative clarity tools
